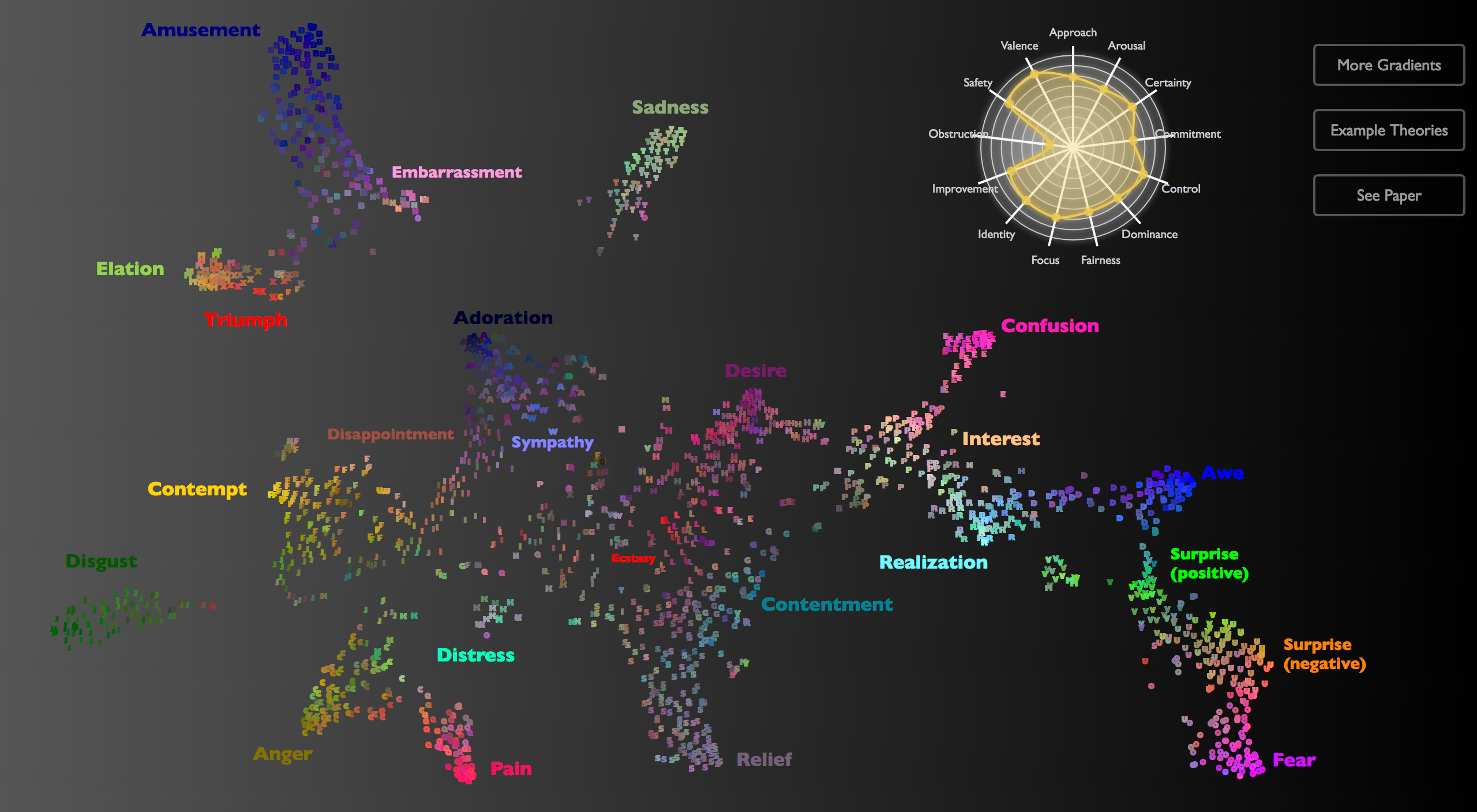

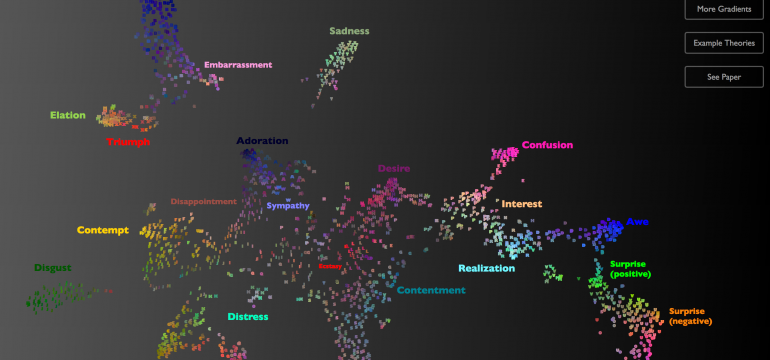

Emotional vocalizations are central to human social life. Recent studies have documented that people recognize at least 13 emotions in brief vocalizations. This capacity emerges early in development, is preserved in some form across cultures, and informs how people respond emotionally to music. What is poorly understood is how emotion recognition from vocalization is structured within what we call a semantic space, the study of which addresses questions critical to the field: How many distinct kinds of emotions can be expressed? Do expressions convey emotion categories or affective appraisals (e.g., valence, arousal)? Is the recognition of emotion expressions discrete or continuous? Guided by a new theoretical approach to emotion taxonomies, we apply large-scale data collection and analysis techniques to judgments of 2,032 emotional vocal bursts produced in laboratory settings (Study 1) and 48 found in the real world (Study 2) by U.S. English speakers (N = 1,105). We find that vocal bursts convey at least 24 distinct kinds of emotion. Emotion categories (sympathy, awe), more so than affective appraisals (including valence and arousal), organize emotion recognition. In contrast to discrete emotion theories, the emotion categories conveyed by vocal bursts are bridged by smooth gradients with continuously varying meaning. We visualize the complex, high-dimensional space of emotion conveyed by brief human vocalization within an online interactive map.

Read Article PDF: "Mapping 24 Emotions Conveyed by Brief Human Vocalization"
