http://www.auditory-seismology.org/

Abstract

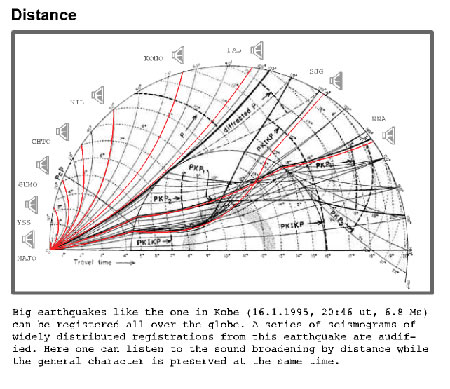

Seismic waves usually have frequencies below 1 Hz, so earthquakes are rarely accompanied by audible sound. The human hearing range extends from 20 Hz to 20 kHz, far above the spectrum of the earth’s rumbling and tumbling. This is one of the reasons why seismometric records are commonly studied by eye, using visual criteria. Nevertheless, if one compresses the time axis of a seismogram by a factor of about 2000 and plays it through a loudspeaker (so-called ‘audification’), the record becomes audible and can be studied by ear, using acoustic criteria. Compressing time by a factor of 2000 multiplies every frequency by the same factor, so a 1 Hz seismic wave is heard at 2 kHz. A few attempts have been made in the past to use the audification of seismograms as a method in seismology. In the 1960s Sheridan D. Speeth proposed it for discriminating atomic explosions from natural earthquakes; G. E. Frantti and L. A. Leverault re-examined it in 1965, and Chris Hayward applied audification to seismic research in 1994. These attempts, however, faded away with almost no response, in our opinion because a more general rationale and a founding theory of «Auditory Seismology» were missing. We would like to make the case for this research topic with the following considerations:
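The audification step described above amounts to replaying the recorded samples at a much higher rate. Below is a minimal sketch using only the Python standard library (`wave`, `struct`). It assumes a trace sampled at a hypothetical 20 Hz, and the function names `audify` and `audible_frequency`, as well as the synthetic 0.5 Hz trace, are illustrative rather than taken from the original work. No resampling is performed. The same samples are simply labeled with a playback rate 2000 times higher, so a 20 Hz recording plays at 40 kHz and the seismic band of roughly 0.01 to 10 Hz lands on 20 Hz to 20 kHz.

```python
import io
import math
import struct
import wave

SPEEDUP = 2000  # time-compression factor quoted in the text ("about 2000 times")


def audible_frequency(seismic_hz: float, speedup: float = SPEEDUP) -> float:
    """Compressing the time axis by `speedup` multiplies every frequency by it."""
    return seismic_hz * speedup


def audify(samples, recording_rate_hz: float, speedup: float = SPEEDUP) -> bytes:
    """Return a 16-bit mono WAV file (as bytes) that plays `samples`
    `speedup` times faster than they were recorded.

    Hypothetical helper: the samples are not resampled, only relabeled
    with a playback rate of recording_rate_hz * speedup.
    """
    playback_rate = int(round(recording_rate_hz * speedup))
    # Peak amplitude for normalization; fall back to 1.0 to avoid dividing by zero.
    peak = max((abs(s) for s in samples), default=0.0) or 1.0
    # Normalize to [-1, 1] and encode as signed 16-bit little-endian PCM.
    frames = b"".join(
        struct.pack("<h", int(round(32767 * s / peak))) for s in samples
    )
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(playback_rate)
        wav.writeframes(frames)
    return buffer.getvalue()


# Hypothetical input: a synthetic 0.5 Hz "seismogram" sampled at 20 Hz for 10 minutes.
RATE_HZ = 20
trace = [math.sin(2 * math.pi * 0.5 * n / RATE_HZ) for n in range(600 * RATE_HZ)]
wav_bytes = audify(trace, RATE_HZ)
```

With these assumed numbers, 10 minutes of recording (12,000 samples) play back in 0.3 s at 40 kHz, and the 0.5 Hz oscillation is heard at 1 kHz. Real seismograms would first need to be read from a data format such as miniSEED. That step is outside this sketch.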

Philosophical and psychological research shows that there is a substantial difference between seeing and hearing a data set, because each sense brings out and accentuates different aspects of a phenomenon. From a philosophical point of view, the eye is good at recognizing structure, surface and steadiness, whereas the ear is good at recognizing time, continuum, remembrance and expectation. In studying aspects such as tectonic structure, surface deformation and regional seismic risk, visual modes of depiction are hard to surpass. But for questions of temporal development, of characterizing a fault’s continuum, and of the tension between past and expected events, the acoustic mode of representation seems very suitable.

Via: neural.it
